Emotions: the missing element in artificial intelligence?

Artificial intelligence (AI) has become an integral part of contemporary life. Within a fraction of a second, navigation applications can map out routes to any destination we like and even predict the traffic on the way. Online shops, streaming services and advertisements on social media seem to know us better than our closest friends.

AI systems are good at their jobs, at least when the job is contained within a safeguarded space with reduced uncertainties. When situations become more complex, however, AI systems cannot cope. Our trusted navigation system will reroute endlessly in its confusion about a road closure. Our favorite online shop will start recommending goods for a middle-aged man after a Father's Day order of men's socks.

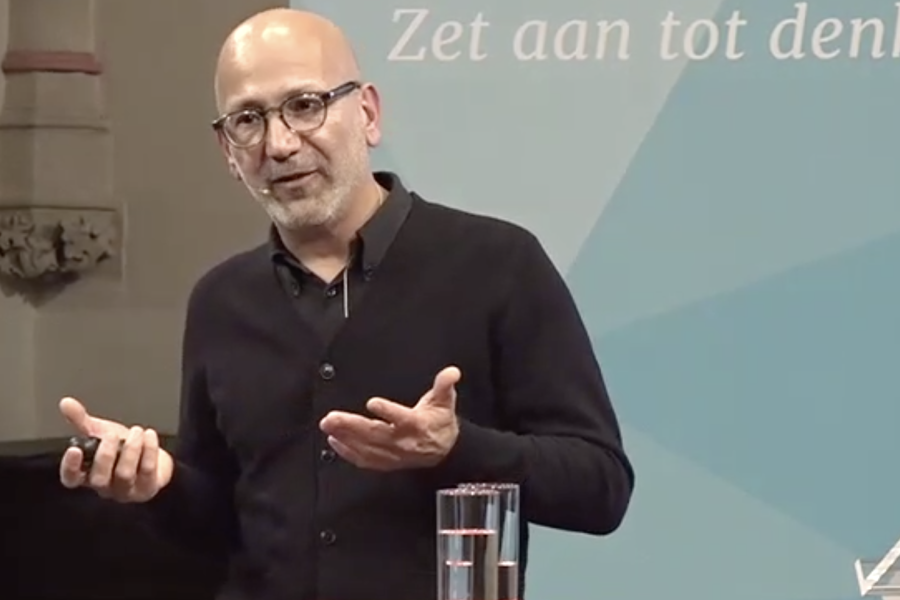

According to computer scientist and philosopher Prof Mehdi Dastani, if we want AI systems to autonomously perform tasks that require human intelligence, we need them to be adept at managing the uncertainty inherent to the real world.

The logic behind uncertainty

In order to think about how AI could deal with uncertainty, we need to take a closer look at how logic works. We reason about the world constantly, moving from observations and assumptions to logical consequences. If it is raining, we are likely to get wet if we go outside. To be sensible, we bring an umbrella or wear a raincoat to stay dry. So, logical reasoning allows us to predict the consequences of our actions.

Steps in logical reasoning, so-called inferences, can be based on true assumptions. For example: if all men are mortal and Socrates is a man, then Socrates is mortal. Both assumptions are true, so the conclusion that follows can also be known to be true. This is considered 'knowledge'.

In the real world, however, such true assumptions are rare. More often than not, the inference is made with a default assumption, like: if birds typically fly and a dove is a bird, then doves possibly fly. This involves a higher degree of uncertainty: there are also birds that do not fly. Therefore, the inference that doves possibly fly is not a fact, but a 'belief' based on the likelihood that the statement is true. Reasoning about the world is mostly based on such beliefs, not on knowledge.
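The distinction between knowledge and belief can be illustrated with a small Python sketch. It is a toy illustration only, not a real reasoning engine: the rule tables, the `Conclusion` type and the `infer` function are hypothetical names introduced here, and the penguin exception is an added example of a bird that does not fly.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Conclusion:
    statement: str
    status: str  # "knowledge" or "belief"


# Hypothetical toy rule base, for illustration only.
STRICT_RULES = {"man": "mortal"}        # all men are mortal
DEFAULT_RULES = {"bird": "flies"}       # birds typically fly
EXCEPTIONS = {("penguin", "flies")}     # assumed known exception to the default


def infer(subject: str, category: str) -> Optional[Conclusion]:
    """Draw one conclusion about `subject`, given the category it belongs to."""
    if category in STRICT_RULES:
        # A strict rule has no exceptions, so the conclusion counts as knowledge.
        return Conclusion(f"{subject} is {STRICT_RULES[category]}", "knowledge")
    if category in DEFAULT_RULES:
        prop = DEFAULT_RULES[category]
        if (subject, prop) in EXCEPTIONS:
            # A known exception blocks the default: nothing is concluded.
            return None
        # A default rule only supports a belief that may later be revised.
        return Conclusion(f"{subject} possibly {prop}", "belief")
    return None


print(infer("Socrates", "man"))
print(infer("dove", "bird"))
print(infer("penguin", "bird"))
```

The point of the sketch is that the default rule yields a conclusion marked as a belief, which a known exception can block, whereas the strict rule yields knowledge.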

Emotions are complementary to rationality

What do AI systems need in order to deal with these uncertain beliefs? To answer such questions, scientists often look to animals that have the ability naturally. In their aspiration to fly, inventors observed birds in flight: their aerodynamic build, the flapping of the wings. The resulting conceptualisation is the airplane, which has gone on to outdo birds in speed, distance and ability to carry cargo during flight.

What creatures, then, are most adept at reasoning about uncertainty? We are. In humans, emotions focus our attention on the aspects of a situation that are important and relevant, and modulate which subsequent actions become more likely. For example, if your flat is on fire, actions like taking a shower or enjoying a meal become very unlikely, while getting out of the building as soon as possible becomes the most salient option. Our emotions work with our cognitions to provide the best response.

According to Dastani, this human emotion and cognition mechanism can be modelled in AI to deal with uncertainty. AI systems without emotions are overly rigid. When a goal cannot be reached, the system will pointlessly keep trying until the power supply is drained. With emotions, AI systems would have a better-adjusted response, argues Dastani. For example, when an objective cannot be achieved, the robot can be programmed to feel "sadness" and decide to abandon the goal. On the other hand, when an objective is at risk of not being achieved but success remains possible, the robot can be programmed to feel "fear" and thus try harder. In this way, having "emotions" modulates the robot's response in the face of uncertainty.
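The "sadness" and "fear" responses described above can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not Dastani's actual model: the function names `appraise` and `respond`, the numeric estimate of the chance of success, the 0.5 risk threshold and the doubling of effort are all hypothetical choices made for the example.

```python
def appraise(success_chance: float, risk_threshold: float = 0.5) -> str:
    """Map the robot's estimated chance of reaching its goal to an 'emotion'.

    `success_chance` is an assumed estimate between 0.0 and 1.0.
    """
    if success_chance <= 0.0:
        return "sadness"   # the goal can no longer be achieved
    if success_chance < risk_threshold:
        return "fear"      # the goal is at risk, but success remains possible
    return "neutral"       # the goal is on track


def respond(emotion: str, effort: float) -> tuple[str, float]:
    """Choose an action and an effort level based on the current 'emotion'."""
    if emotion == "sadness":
        return ("abandon", 0.0)           # stop spending resources on the goal
    if emotion == "fear":
        return ("continue", effort * 2)   # try harder (doubling is arbitrary)
    return ("continue", effort)           # carry on as before


for chance in (0.9, 0.2, 0.0):
    emotion = appraise(chance)
    print(chance, emotion, respond(emotion, effort=1.0))
```

In this sketch the robot without the "sadness" branch would keep returning `"continue"` forever, which corresponds to the rigid behaviour of draining the power supply on an unreachable goal.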

Machines of the future

In order to cope with the uncertainties of the real world, AI systems need the human feature of emotions. Emotions are crucial in decision-making in an uncertain world. With the increasing utilization of AI, this uncertainty is likely to grow as AI systems start to interact with each other in real-life scenarios. This human-like social interaction adds another level of emergent uncertainty. What do the other AI systems know and believe? What are they expected to do? Will they deviate from expectation? For now, modelling human emotions in AI is the first step to grappling with uncertainty.